Simple Growing Container Example

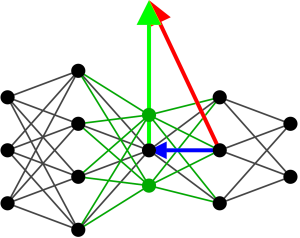

This example shows how to instantiate a model with growing layers.

# Authors: Theo Rudkiewicz <theo.rudkiewicz@inria.fr>

# Sylvain Chevallier <sylvain.chevallier@universite-paris-saclay.fr>
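For readers new to the idea, "growing" a layer means widening it after the model has been created. The sketch below is only a rough intuition in plain Python and is not GroMo's procedure: the helper functions, weights and input are all made up for illustration. It adds one hidden neuron to a tiny two-layer network and gives the new neuron a zero outgoing weight, so the output is unchanged at the moment of growth.

```python
# Generic illustration of widening a hidden layer; not GroMo's algorithm.
# Every name and number here is hypothetical.


def linear(weights, bias, x):
    """Apply y = W x + b, with W stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def two_layer(w1, b1, w2, b2, x):
    """Linear -> ReLU -> Linear."""
    return linear(w2, b2, relu(linear(w1, b1, x)))


# Hidden width 2: the first layer maps 3 -> 2, the second maps 2 -> 1.
w1 = [[0.5, -1.0, 0.25], [1.5, 0.0, -0.5]]
b1 = [0.1, -0.2]
w2 = [[2.0, -1.0]]
b2 = [0.3]

# Grow the hidden layer to width 3: one extra output row in the first layer,
# one extra input column in the second layer. The zero outgoing weight keeps
# the network's function unchanged right after growth.
w1_grown = w1 + [[0.7, 0.7, 0.7]]
b1_grown = b1 + [0.0]
w2_grown = [row + [0.0] for row in w2]

x = [1.0, 0.0, 2.0]
before = two_layer(w1, b1, w2, b2, x)
after = two_layer(w1_grown, b1_grown, w2_grown, b2, x)
print(before == after)
```

In this toy version the new weights are arbitrary; choosing them well is the part a growing library has to solve, and this sketch does not attempt it.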

Setup

Importing the modules

import torch

from gromo.containers.growing_container import GrowingContainer
from gromo.modules.linear_growing_module import (
    LinearGrowingModule,
    LinearMergeGrowingModule,
)
from gromo.utils.utils import global_device

Define your model

class GrowingNetwork(GrowingContainer):
    """Small network with two merge points, built from growing linear layers."""

    def __init__(
        self,
        in_features: int = 5,
        out_features: int = 1,
        use_bias: bool = True,
        hidden_features: int = 10,
        device: torch.device = None,
    ):
        super(GrowingNetwork, self).__init__(
            in_features=in_features,
            out_features=out_features,
            device=device,
        )
        # Modules, listed in forward order.
        self.start_module = LinearMergeGrowingModule(
            in_features=self.in_features, name="start"
        )
        self.l1 = LinearGrowingModule(
            in_features=self.in_features,
            out_features=hidden_features,
            use_bias=use_bias,
            post_layer_function=torch.nn.ReLU(),
            name="l1",
        )
        self.l2 = LinearGrowingModule(
            in_features=hidden_features,
            out_features=self.in_features,
            name="l2",
            use_bias=use_bias,
        )
        self.res_module = LinearMergeGrowingModule(
            in_features=self.in_features,
            post_merge_function=torch.nn.ReLU(),
            name="res",
        )
        self.l3 = LinearGrowingModule(
            in_features=self.in_features,
            out_features=self.out_features,
            name="l3",
            use_bias=use_bias,
        )
        self.l4 = LinearGrowingModule(
            in_features=self.in_features,
            out_features=hidden_features,
            post_layer_function=torch.nn.ReLU(),
            name="l4",
            use_bias=use_bias,
        )
        self.l5 = LinearGrowingModule(
            in_features=hidden_features,
            out_features=self.out_features,
            name="l5",
            use_bias=use_bias,
        )
        self.end_module = LinearMergeGrowingModule(
            in_features=self.out_features, name="end"
        )

        # Wire the graph: tell each module its predecessor(s) and successor(s).
        self.start_module.set_next_modules([self.l1, self.res_module])
        self.l1.previous_module = self.start_module
        self.l1.next_module = self.l2
        self.l2.previous_module = self.l1
        self.l2.next_module = self.res_module
        self.res_module.set_previous_modules([self.start_module, self.l2])
        self.res_module.set_next_modules([self.l3, self.l4])
        self.l3.previous_module = self.res_module
        self.l3.next_module = self.end_module
        self.l4.previous_module = self.res_module
        self.l4.next_module = self.l5
        self.l5.previous_module = self.l4
        self.l5.next_module = self.end_module
        self.end_module.set_previous_modules([self.l3, self.l5])

        self.set_growing_layers()

    def set_growing_layers(self):
        self._growing_layers = [
            self.start_module,
            self.l1,
            self.l2,
            self.res_module,
            self.l3,
            self.l4,
            self.l5,
            self.end_module,
        ]

    def __str__(self, verbose=0):
        if verbose == 0:
            return super(GrowingNetwork, self).__str__()
        else:
            txt = [f"{self.__class__.__name__}:"]
            for layer in self._growing_layers:
                txt.append(layer.__str__(verbose=verbose))
            return "\n".join(txt)

    def forward(self, x):
        x = self.start_module(x)
        # First branch: l1 -> l2, added back onto the input at res_module.
        x1 = self.l1(x)
        x1 = self.l2(x1)
        x = self.res_module(x + x1)
        # Second stage: l3 and l4 -> l5 run in parallel and are summed at end_module.
        x1 = self.l3(x)
        x = self.l4(x)
        x = self.l5(x)
        return self.end_module(x + x1)

    def start_computing_s_m(self):
        """Initialize the S and M tensors and start storing inputs and pre-activities."""
        for layer in self._growing_layers:
            layer.tensor_s.init()
            if isinstance(layer, LinearGrowingModule):
                layer.tensor_m.init()
                layer.store_input = True
                layer.store_pre_activity = True

    def update_s_m(self):
        """Accumulate S and M from the last forward/backward pass."""
        for layer in self._growing_layers:
            if isinstance(layer, LinearGrowingModule):
                layer.tensor_m.update()
                layer.tensor_s.update()

    def pass_s_m(self, input_x, target_y, loss=torch.nn.MSELoss()):
        """Run one forward/backward pass on a batch, then update S and M."""
        input_x = input_x.to(self.device)
        target_y = target_y.to(self.device)
        self.zero_grad()
        y = self(input_x)
        loss_value = loss(y, target_y)
        loss_value.backward()
        self.update_s_m()

    def stop_computing_s_m(self):
        """Reset all statistics and stop storing inputs and pre-activities."""
        for layer in self._growing_layers:
            layer.tensor_s.reset()
            if isinstance(layer, LinearGrowingModule):
                layer.tensor_m.reset()
            if isinstance(layer, LinearMergeGrowingModule):
                if layer.previous_tensor_s is not None:
                    layer.previous_tensor_s.reset()
                if layer.previous_tensor_m is not None:
                    layer.previous_tensor_m.reset()
            layer.store_input = False
            layer.store_pre_activity = False

if __name__ == "__main__":
    device = global_device()
    net = GrowingNetwork(5, 1, device=device)
    x_input = torch.randn(20, 5, device=device)
    y = net(x_input)
    torch.norm(y).backward()
    print(net)
    print(net.l1.layer.weight.device)

    # from torchinfo import summary
    # summary(net, input_size=(1, 5), device=device)

    print(net.l1.layer.weight.device)

    for layer in net.children():
        print(layer.__str__(verbose=2))

    net.start_computing_s_m()

    print("=" * 80)

    for layer in net.children():
        print(layer.__str__(verbose=2))

    net.end_module.previous_tensor_s.init()
    net.end_module.previous_tensor_m.init()

    for _ in range(2):
        x_input = torch.randn(20, 5)
        # net.zero_grad()
        # y = net(x_input)
        # torch.norm(y).backward()
        net.pass_s_m(x_input, torch.zeros(20, 1))
        net.end_module.previous_tensor_s.update()
        net.end_module.previous_tensor_m.update()

    for layer in net.children():
        print(layer.__str__(verbose=2))

    for layer in net.children():
        if isinstance(layer, LinearGrowingModule):
            layer.compute_optimal_delta()

    for layer in net.children():
        print(layer.__str__(verbose=2))

    net.stop_computing_s_m()

    for layer in net.children():
        print(layer.__str__(verbose=2))
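The wiring done at the end of `__init__` is easier to follow as a directed graph. The sketch below is a reading aid only: it uses plain dictionaries keyed by the `name` strings from the script and does not call GroMo. The `out_width` table is filled in by hand from the default sizes (`in_features=5`, `hidden_features=10`, `out_features=1`).

```python
# Successors of each module, transcribed by hand from the wiring in __init__.
next_modules = {
    "start": ["l1", "res"],
    "l1": ["l2"],
    "l2": ["res"],
    "res": ["l3", "l4"],
    "l3": ["end"],
    "l4": ["l5"],
    "l5": ["end"],
    "end": [],
}

# Derive predecessors by inverting the successor map.
previous_modules = {name: [] for name in next_modules}
for source, targets in next_modules.items():
    for target in targets:
        previous_modules[target].append(source)

# The three merge modules are where branches split or join.
for name in ("start", "res", "end"):
    n_prev = len(previous_modules[name])
    n_next = len(next_modules[name])
    print(f"{name}: {n_prev} previous, {n_next} next")

# Output width of each module with the default sizes (hand-filled assumption).
out_width = {
    "start": 5, "l1": 10, "l2": 5, "res": 5,
    "l3": 1, "l4": 10, "l5": 1, "end": 1,
}

# forward() adds the incoming branches at "res" and "end",
# so every predecessor of a joining module must produce the same width.
for name in ("res", "end"):
    widths = {out_width[p] for p in previous_modules[name]}
    assert len(widths) == 1, f"branches entering {name} disagree: {widths}"
```

The counts it prints for `start`, `res` and `end` agree with the previous/next module counts in the printed model summary. The width check also shows why `l2` maps back to `in_features`: its output is added to the block input before `res_module`.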

GrowingNetwork(

(start_module): LinearMergeGrowingModule module with no previous modules and 2 next modules.

(l1): LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True)

(l2): LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)

(res_module): LinearMergeGrowingModule module with 2 previous modules and 2 next modules.

(l3): LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True)

(l4): LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)

(l5): LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)

(end_module): LinearMergeGrowingModule module with 2 previous modules and no next modules.

)

cpu

cpu

LinearMergeGrowingModule module.

Previous modules : []

Next modules : [LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True), LinearMergeGrowingModule module with 2 previous modules and 2 next modules.]

Post merge function : Identity()

Allow growing : False

Store input : 0

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Previous tensor S : None

Previous tensor M : None

LinearGrowingModule(l1) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l1)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l1)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l2) module with 55 parameters.

Layer : Linear(in_features=10, out_features=5, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l2)) tensor of shape (11, 5) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearMergeGrowingModule module with no previous modules and 2 next modules., LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)]

Next modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)]

Post merge function : ReLU()

Allow growing : False

Store input : 0

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 11) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 5) with 0 samples

LinearGrowingModule(l3) module with 6 parameters.

Layer : Linear(in_features=5, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l3)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l3)) tensor of shape (6, 1) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l4) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l4)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l4)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l5) module with 11 parameters.

Layer : Linear(in_features=10, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l5)) tensor of shape (11, 1) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)]

Next modules : []

Post merge function : Identity()

Allow growing : False

Store input : 0

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(end)) tensor of shape (2, 2) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 17) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 1) with 0 samples

================================================================================

LinearMergeGrowingModule module.

Previous modules : []

Next modules : [LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True), LinearMergeGrowingModule module with 2 previous modules and 2 next modules.]

Post merge function : Identity()

Allow growing : False

Store input : 0

Store activity : 1

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Previous tensor S : None

Previous tensor M : None

LinearGrowingModule(l1) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l1)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l1)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l2) module with 55 parameters.

Layer : Linear(in_features=10, out_features=5, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l2)) tensor of shape (11, 5) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearMergeGrowingModule module with no previous modules and 2 next modules., LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)]

Next modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)]

Post merge function : ReLU()

Allow growing : False

Store input : 1

Store activity : 2

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 11) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 5) with 0 samples

LinearGrowingModule(l3) module with 6 parameters.

Layer : Linear(in_features=5, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l3)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l3)) tensor of shape (6, 1) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l4) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l4)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l4)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l5) module with 11 parameters.

Layer : Linear(in_features=10, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l5)) tensor of shape (11, 1) with 0 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)]

Next modules : []

Post merge function : Identity()

Allow growing : False

Store input : 2

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(end)) tensor of shape (2, 2) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 17) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 1) with 0 samples

LinearMergeGrowingModule module.

Previous modules : []

Next modules : [LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True), LinearMergeGrowingModule module with 2 previous modules and 2 next modules.]

Post merge function : Identity()

Allow growing : False

Store input : 0

Store activity : 1

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 40 samples

Previous tensor S : None

Previous tensor M : None

LinearGrowingModule(l1) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l1)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l1)) tensor of shape (6, 10) with 40 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l2) module with 55 parameters.

Layer : Linear(in_features=10, out_features=5, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 40 samples

Tensor S : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 40 samples

Tensor M : M(LinearGrowingModule(l2)) tensor of shape (11, 5) with 40 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearMergeGrowingModule module with no previous modules and 2 next modules., LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)]

Next modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)]

Post merge function : ReLU()

Allow growing : False

Store input : 1

Store activity : 2

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 11) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 5) with 0 samples

LinearGrowingModule(l3) module with 6 parameters.

Layer : Linear(in_features=5, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l3)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l3)) tensor of shape (6, 1) with 40 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l4) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l4)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l4)) tensor of shape (6, 10) with 40 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l5) module with 11 parameters.

Layer : Linear(in_features=10, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 40 samples

Tensor S : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 40 samples

Tensor M : M(LinearGrowingModule(l5)) tensor of shape (11, 1) with 40 samples

Optimal delta layer : None

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)]

Next modules : []

Post merge function : Identity()

Allow growing : False

Store input : 2

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(end)) tensor of shape (2, 2) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 17) with 40 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 1) with 40 samples

LinearMergeGrowingModule module.

Previous modules : []

Next modules : [LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True), LinearMergeGrowingModule module with 2 previous modules and 2 next modules.]

Post merge function : Identity()

Allow growing : False

Store input : 0

Store activity : 1

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 40 samples

Previous tensor S : None

Previous tensor M : None

LinearGrowingModule(l1) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l1)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l1)) tensor of shape (6, 10) with 40 samples

Optimal delta layer : Linear(in_features=5, out_features=10, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l2) module with 55 parameters.

Layer : Linear(in_features=10, out_features=5, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 40 samples

Tensor S : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 40 samples

Tensor M : M(LinearGrowingModule(l2)) tensor of shape (11, 5) with 40 samples

Optimal delta layer : Linear(in_features=10, out_features=5, bias=True)

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearMergeGrowingModule module with no previous modules and 2 next modules., LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)]

Next modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)]

Post merge function : ReLU()

Allow growing : False

Store input : 1

Store activity : 2

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 11) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 5) with 0 samples

LinearGrowingModule(l3) module with 6 parameters.

Layer : Linear(in_features=5, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l3)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l3)) tensor of shape (6, 1) with 40 samples

Optimal delta layer : Linear(in_features=5, out_features=1, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l4) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : True

self._internal_store_input=False

Store pre-activity : True

self._internal_store_pre_activity=True

Tensor S (internal) : S(LinearGrowingModule(l4)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 40 samples

Tensor M : M(LinearGrowingModule(l4)) tensor of shape (6, 10) with 40 samples

Optimal delta layer : Linear(in_features=5, out_features=10, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l5) module with 11 parameters.

Layer : Linear(in_features=10, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : True

self._internal_store_input=True

Store pre-activity : True

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 40 samples

Tensor S : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 40 samples

Tensor M : M(LinearGrowingModule(l5)) tensor of shape (11, 1) with 40 samples

Optimal delta layer : Linear(in_features=10, out_features=1, bias=True)

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)]

Next modules : []

Post merge function : Identity()

Allow growing : False

Store input : 2

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(end)) tensor of shape (2, 2) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 17) with 40 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 1) with 40 samples

LinearMergeGrowingModule module.

Previous modules : []

Next modules : [LinearGrowingModule(LinearGrowingModule(l1))(in_features=5, out_features=10, use_bias=True), LinearMergeGrowingModule module with 2 previous modules and 2 next modules.]

Post merge function : Identity()

Allow growing : False

Store input : False

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Previous tensor S : None

Previous tensor M : None

LinearGrowingModule(l1) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l1)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(start)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l1)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : Linear(in_features=5, out_features=10, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l2) module with 55 parameters.

Layer : Linear(in_features=10, out_features=5, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l2)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l2)) tensor of shape (11, 5) with 0 samples

Optimal delta layer : Linear(in_features=10, out_features=5, bias=True)

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearMergeGrowingModule module with no previous modules and 2 next modules., LinearGrowingModule(LinearGrowingModule(l2))(in_features=10, out_features=5, use_bias=True)]

Next modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l4))(in_features=5, out_features=10, use_bias=True)]

Post merge function : ReLU()

Allow growing : False

Store input : False

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 11) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(res)) tensor of shape (11, 5) with 0 samples

LinearGrowingModule(l3) module with 6 parameters.

Layer : Linear(in_features=5, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l3)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l3)) tensor of shape (6, 1) with 0 samples

Optimal delta layer : Linear(in_features=5, out_features=1, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l4) module with 60 parameters.

Layer : Linear(in_features=5, out_features=10, bias=True)

Post layer function : ReLU()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l4)) tensor of shape (6, 6) with 0 samples

Tensor S : S(LinearMergeGrowingModule(res)) tensor of shape (6, 6) with 0 samples

Tensor M : M(LinearGrowingModule(l4)) tensor of shape (6, 10) with 0 samples

Optimal delta layer : Linear(in_features=5, out_features=10, bias=True)

Extended input layer : None

Extended output layer : None

LinearGrowingModule(l5) module with 11 parameters.

Layer : Linear(in_features=10, out_features=1, bias=True)

Post layer function : Identity()

Allow growing : False

Store input : False

self._internal_store_input=False

Store pre-activity : False

self._internal_store_pre_activity=False

Tensor S (internal) : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor S : S(LinearGrowingModule(l5)) tensor of shape (11, 11) with 0 samples

Tensor M : M(LinearGrowingModule(l5)) tensor of shape (11, 1) with 0 samples

Optimal delta layer : Linear(in_features=10, out_features=1, bias=True)

Extended input layer : None

Extended output layer : None

LinearMergeGrowingModule module.

Previous modules : [LinearGrowingModule(LinearGrowingModule(l3))(in_features=5, out_features=1, use_bias=True), LinearGrowingModule(LinearGrowingModule(l5))(in_features=10, out_features=1, use_bias=True)]

Next modules : []

Post merge function : Identity()

Allow growing : False

Store input : False

Store activity : 0

Tensor S : S(LinearMergeGrowingModule(end)) tensor of shape (2, 2) with 0 samples

Previous tensor S : S[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 17) with 0 samples

Previous tensor M : M[-1](LinearMergeGrowingModule(end)) tensor of shape (17, 1) with 0 samples
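The parameter counts and tensor shapes in the output above follow a simple pattern in the layer sizes. The sketch below reproduces them in plain Python. The formulas are inferred from the printed numbers, not taken from GroMo's documentation: the extra row is consistent with one additional input entry for the bias when `use_bias=True`, and the function names are hypothetical.

```python
# (in_features, out_features) of each LinearGrowingModule in the example,
# all created with use_bias=True.
layers = {
    "l1": (5, 10),
    "l2": (10, 5),
    "l3": (5, 1),
    "l4": (5, 10),
    "l5": (10, 1),
}
BATCH_SIZE = 20  # rows of each torch.randn(20, 5) batch
N_PASSES = 2     # iterations of the pass_s_m loop


def parameter_count(n_in, n_out):
    """Weights plus one bias per output."""
    return n_in * n_out + n_out


def s_shape(n_in):
    """Observed shape of tensor S: square, one extra row/column beyond n_in."""
    return (n_in + 1, n_in + 1)


def m_shape(n_in, n_out):
    """Observed shape of tensor M: (n_in + 1) rows, n_out columns."""
    return (n_in + 1, n_out)


for name, (n_in, n_out) in layers.items():
    print(name, parameter_count(n_in, n_out), s_shape(n_in), m_shape(n_in, n_out))

# end_module's "previous" tensors stack the inputs of its two predecessor
# linear layers, l3 and l5: (5 + 1) + (10 + 1) = 17 rows.
end_rows = sum(layers[name][0] + 1 for name in ("l3", "l5"))
print("end previous S:", (end_rows, end_rows), "previous M:", (end_rows, 1))

# Two passes over batches of 20 rows give the reported sample count.
print("samples:", BATCH_SIZE * N_PASSES)
```

The same stacking rule gives 11 rows for `res_module`, whose only predecessor linear layer is `l2` (10 + 1). That matches the `(11, 11)` and `(11, 5)` shapes printed for its previous tensors.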
