What’s new

“Enhancements” for new features

“Bugs” for bug fixes

“API changes” for backward-incompatible changes

Develop branch

Enhancements

Update GrowingBlock to include recently added features in GrowingModule such as in_neurons property, target_in_neurons parameter, and methods for multi-step growth processes (#186 by Théo Rudkiewicz)

Add in_neurons property and target_in_neurons parameter to GrowingModule, LinearGrowingModule, and Conv2dGrowingModule for tracking neuron counts during growth. Add missing_neurons, number_of_neurons_to_add, and complete_growth methods to simplify multi-step growth processes (#187 by Théo Rudkiewicz)

Add new normalization methods (#185 by Théo Rudkiewicz)

Update output_volume in Conv2dMergeGrowingModule based on post_merge_function and reshaping (#177 by Stella Douka)

Implement lazy-loading datasets that read directly from disk (#169 by Stella Douka)

Turn in_channels and out_channels into properties in Conv2dGrowingModule (#174 by Stella Douka)

Introduce a SequentialGrowingContainer structure specialized for containers with sequential layers. Introduce a ResNetBasicBlock class to create ResNet-18/34-like structures with growable blocks and the possibility of adding blocks. (#168 by Théo Rudkiewicz)

Allow creating layer extensions with different simple initializations (various random initializations and zero). (#165 by Théo Rudkiewicz)

Add TensorStatisticWithEstimationError and the corresponding test class TestTensorStatisticWithEstimationError. It computes an estimate of the quadratic error made when estimating the given tensor statistic. Modify TensorStatistic so that there is no need to call init (#149 by Félix Houdouin).

Add a method normalize_optimal_updates in GrowingModule to normalize the optimal weight updates before applying them (#164 by Théo Rudkiewicz)

Add setter for scaling factor in GrowingModule (#157 by Stella Douka)

Minor improvements of GrowingContainer (#161 by Théo Rudkiewicz)

Add in_features and out_features properties to GrowingModule and LinearGrowingModule (#160 by Théo Rudkiewicz)

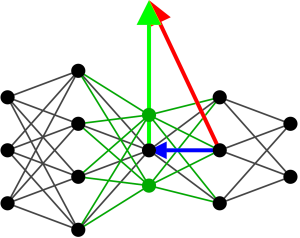

Add support for convolutional DAGs in GrowingDAG and GrowingGraphNetwork (#148 by Stella Douka)

Handle previous and next layers when deleting GrowingModule and MergeGrowingModule objects (#148 by Stella Douka)

Add weights_statistics method to GrowingModule and GrowingContainer to retrieve statistics of weights in all growing layers. (#152 by Théo Rudkiewicz)

Add ruff linter to pre-commit hooks and to the CI (#151 by Théo Rudkiewicz)

Add GrowingBlock to mimic a ResNet 18/34 block. (#106 by Théo Rudkiewicz)

fix(RestrictedConv2dGrowingModule.bordered_unfolded_extended_prev_input): Use the correct input size to compute the border effect of the convolution. (#147 by Théo Rudkiewicz)

Create an input_size property in GrowingModule. (#143 by Théo Rudkiewicz)

Improve GrowingContainer to allow GrowingContainer as submodules (#133 by Théo Rudkiewicz and Stella Douka).

Fix sign errors in compute_optimal_added_parameters when using tensor_m_prev and in tensor_n computation. Add unit tests to cover these cases (#118 and #115 by Théo Rudkiewicz).

Estimate dependencies between activities for faster expansion (#100 by Stella Douka)

Make flattening of the input optional in GrowingMLP. The default value is True for backward compatibility (#108 by Stéphane Rivaud).

Add the option to handle post-layer functions that need to grow, such as BatchNorm (#105 by Théo Rudkiewicz).

Add a robust compute_optimal_delta function to gromo.utils.tools with comprehensive dtype handling, automatic LinAlgError fallback to the pseudo-inverse, a float64 retry mechanism for negative-decrease scenarios, and an extensive test suite achieving 95% coverage. The function computes optimal weight updates for layer expansion using the formula dW* = M S⁻¹, with full backward compatibility (#114 by Stéphane Rivaud)

Refactor GrowingModule to centralize tensor_s_growth handling (#109 by Stéphane Rivaud)

Add use_projected_gradient parameter to growing modules to control whether to use projected gradient (tensor_n) or raw tensor (tensor_m_prev) for computing new neurons. This provides more flexibility in the optimization strategy and improves test coverage for critical code paths (#104 by Théo Rudkiewicz).

Fix statistics normalization in LinearGrowingModule (#110 by Stéphane Rivaud)

Raise overall test coverage from 92% to 95% through a systematic four-phase effort targeting critical modules: utils.py (80% → 96%), tools.py (78% → 98%), and growing_module.py (92% → 94%). Added 27 test methods covering multi-device compatibility, error handling paths, mathematical algorithm edge cases, and abstract class testing via concrete implementations (#113 by Stéphane Rivaud).

Fix the tensor_n computation in RestrictedConv2dGrowingModule (#103 by Théo Rudkiewicz).

Add GrowingBatchNorm1d and GrowingBatchNorm2d modules to support batch normalization in growing networks (#101 by Théo Rudkiewicz).

Implemented Conv2dMergeGrowingModule and added support for computing number of parameters in Conv2dGrowingModule (#94 by Stella Douka)

Optimize RestrictedConv2dGrowingModule to speed up the simulation of the side effect of a convolution (#99 by Théo Rudkiewicz).

Split Conv2dGrowingModule into two subclasses: FullConv2dGrowingModule (which behaves like the previous class) and RestrictedConv2dGrowingModule (which computes only the best 1x1 convolution as the second layer at growth time) (#92 by Théo Rudkiewicz).

Factor out the methods compute_optimal_added_parameters and compute_optimal_delta, which are now abstracted in the GrowingModule class (#87 by Théo Rudkiewicz).

Stop automatically computing the parameter update in Conv2dGrowingModule.compute_optimal_added_parameters, to be consistent with LinearGrowingModule.compute_optimal_added_parameters (#87 by Théo Rudkiewicz).

Add a generic GrowingContainer to simplify model management, along with unit tests. Propagate the modifications to models. (#77 by Stéphane Rivaud)

Refactor and simplify repo structure (#72 and #73 by Stella Douka)

Simplify global device handling (#72 by Stella Douka)

Integrate an MLP Mixer (#70 by Stéphane Rivaud)

Integrate a Residual MLP (#69 by Stéphane Rivaud)

Option to restrict action space (#60 by Stella Douka)

Add support for Conv2d layers in the sequential case (#34 by Théo Rudkiewicz)

Replaced the assert statements with self.assert* methods in the unit tests (#50 by Théo Rudkiewicz)

Reduce unit tests computational load, add parametrized unit tests (#46 by Sylvain Chevallier)

Add the possibility to separate S for natural gradient and S for new weights (#33 by Théo Rudkiewicz)

Added GPU tracking (#16 by Stella Douka)

Added Bayesian Information Criterion for selecting network expansion (#16 by Stella Douka)

Unified documentation style (#14 by Stella Douka)

Updated Unit Tests (#14 by Stella Douka)

Option to disable logging (#14 by Stella Douka)

Add CI (#2 by Sylvain Chevallier)

Modify LinearGrowingModule to operate on the last dimension of an input tensor with arbitrary shape (#54 by Stéphane Rivaud)
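
As an illustration of the compute_optimal_delta entry above (#114), the formula dW* = M S⁻¹ with a pseudo-inverse fallback can be sketched as follows. This is a minimal NumPy sketch, not the gromo implementation: the function name optimal_delta_sketch, the use of NumPy instead of PyTorch, and the assumed shapes (M of shape (out_features, in_features), S square of size in_features) are all assumptions made for the example, and the dtype handling and float64 retry mentioned in the entry are not reproduced.

```python
import numpy as np


def optimal_delta_sketch(tensor_m: np.ndarray, tensor_s: np.ndarray) -> np.ndarray:
    """Illustrative dW* = M S^-1 with a pseudo-inverse fallback.

    Hypothetical helper, not part of gromo. Assumes tensor_m has shape
    (out_features, in_features) and tensor_s is square (in_features, in_features).
    """
    try:
        # Solve X S = M for X by solving S^T X^T = M^T,
        # which avoids forming the explicit inverse of S.
        return np.linalg.solve(tensor_s.T, tensor_m.T).T
    except np.linalg.LinAlgError:
        # S is singular: fall back to the Moore-Penrose pseudo-inverse.
        return tensor_m @ np.linalg.pinv(tensor_s)


# Usage: a well-conditioned S goes through the solver,
# a singular S goes through the pseudo-inverse branch.
m = np.array([[2.0, 4.0]])
delta_regular = optimal_delta_sketch(m, np.array([[2.0, 0.0], [0.0, 4.0]]))
delta_singular = optimal_delta_sketch(m, np.array([[1.0, 0.0], [0.0, 0.0]]))
```

Solving the linear system rather than inverting S is a common numerical choice; whether gromo does the same is not stated in this changelog.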

Bugs

Fix sub-modules that are not registered in pytorch (#179 by Stella Douka)

Fix persistent value of input volume (#174 by Stella Douka)

Fix memory leak in tensor updates (#138 by Stella Douka)

Fix device handling in GrowingMLP, GrowingMLPMixer, and GrowingResidualMLP (#129 by Stella Douka)

Delete leftover activity tensors (#78 & #100 by Stella Douka)

Fix inconsistency with torch.empty not creating empty tensors (#78 by Stella Douka)

Fix expansion of existing nodes not being executed in GrowingDAG (#78 by Stella Douka)

Fix the computation of optimal added neurons without natural gradient step (#74 by Stéphane Rivaud)

Fix the data type management for growth related computations. (#79 by Stéphane Rivaud)

Revert global state changes, solve test issues (#70 by Stella Douka)

Fix the data augmentation bug in get_dataset (#58 by Stéphane Rivaud)

Use a different scaling factor for input and output extensions. In addition, apply_change and extended_forward now have compatible behavior in terms of scaling factor. (#48 by Théo Rudkiewicz)

Fix the change application when updating the previous layer (#48 by Théo Rudkiewicz)

Fix the sub-selection of added neurons in the sequential case (#41 by Théo Rudkiewicz)

Correct codecov upload (#49 by Sylvain Chevallier)

Fix dataset input_shape: remove the flattening in data augmentation (#56 by Stéphane Rivaud)

Fix memory leak from issue #96 (#97 by Théo Rudkiewicz)

API changes

Allow growth between two GrowingDAG modules (#148 & #179 by Stella Douka)

Apply all candidate expansions on the same GrowingDAG without deepcopy (#148 by Stella Douka)

Moved compute_optimal_delta function from LinearMergeGrowingModule to MergeGrowingModule (#94 by Stella Douka)

Renamed AdditionGrowingModule to MergeGrowingModule for clarity (#84 by Stella Douka)

Added support for configuration files that override default class arguments (#38 by Stella Douka)