gromo.growing_module.AdditionGrowingModule

- class gromo.growing_module.AdditionGrowingModule(post_addition_function: Module = Identity(), previous_modules: list[AdditionGrowingModule | GrowingModule] = None, next_modules: list[AdditionGrowingModule | GrowingModule] = None, allow_growing: bool = False, tensor_s_shape: tuple[int, int] = None, device: device | None = None, name: str = None)

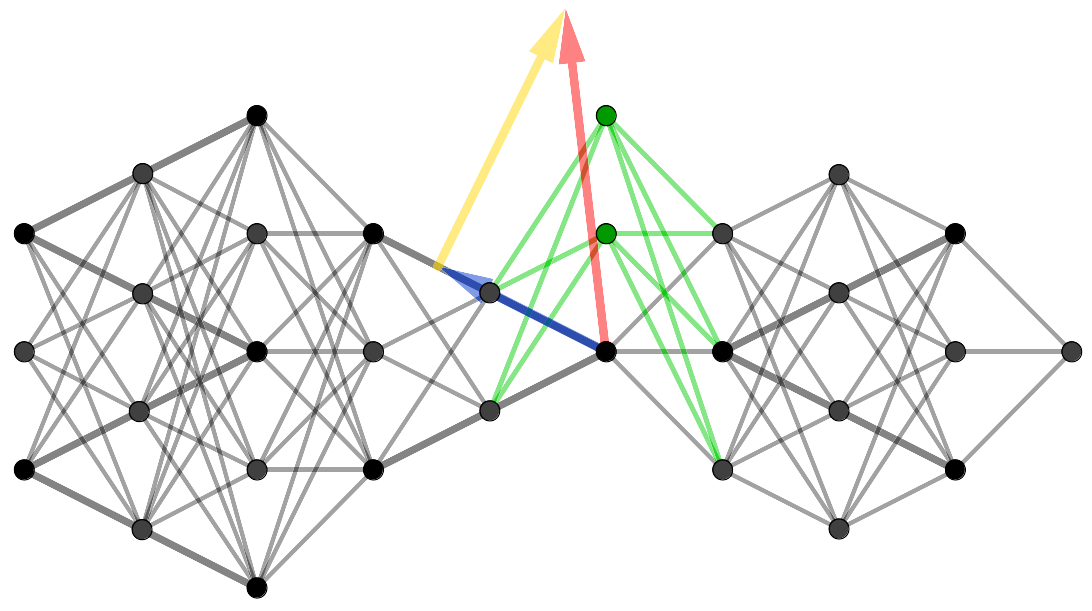

Module that connects multiple modules through an addition operation. The module does not perform the addition itself; the user is responsible for it.
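Since the summation is left to the caller, one plausible usage pattern is to sum the branch outputs first and then pass the result on. The sketch below is pure Python and does not use gromo: `branch_a`, `branch_b`, `elementwise_sum`, and `post_addition` are hypothetical stand-ins, and it assumes that the forward pass only applies `post_addition_function` to an input that has already been summed.

```python
# Hypothetical stand-ins: plain functions play the role of the previous
# modules and of the post-addition function (Identity by default).

def branch_a(x):
    # first incoming branch: doubles every entry
    return [2.0 * v for v in x]

def branch_b(x):
    # second incoming branch: shifts every entry by one
    return [v + 1.0 for v in x]

def elementwise_sum(*tensors):
    # the addition that the user is responsible for
    return [sum(vals) for vals in zip(*tensors)]

def post_addition(x):
    # stand-in for post_addition_function, here a ReLU-like clamp
    return [max(0.0, v) for v in x]

x = [-3.0, 0.5, 2.0]
summed = elementwise_sum(branch_a(x), branch_b(x))  # done by the caller
out = post_addition(summed)  # assumed role of the merge module
print(summed, out)
```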

- add_next_module(module: AdditionGrowingModule | GrowingModule) → None

Add a module to the next modules of the current module.

- Parameters:

module – next module to add

- add_previous_module(module: AdditionGrowingModule | GrowingModule) → None

Add a module to the previous modules of the current module.

- Parameters:

module – previous module to add
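The bookkeeping behind `add_next_module` and `add_previous_module` can be pictured with a toy graph. This is a sketch under assumptions, not the gromo implementation: `ToyNode` is a hypothetical class, each method is assumed to simply append to a list, and the caller is assumed to link both directions explicitly.

```python
class ToyNode:
    """Hypothetical stand-in for a module that tracks its neighbours."""

    def __init__(self, name):
        self.name = name
        self.previous_modules = []
        self.next_modules = []

    def add_previous_module(self, module):
        # record an incoming edge
        self.previous_modules.append(module)

    def add_next_module(self, module):
        # record an outgoing edge
        self.next_modules.append(module)


# Two branches merge into one addition node, which feeds one layer.
branch_1, branch_2 = ToyNode("branch_1"), ToyNode("branch_2")
merge, head = ToyNode("merge"), ToyNode("head")

for branch in (branch_1, branch_2):
    merge.add_previous_module(branch)
    branch.add_next_module(merge)  # assumption: the caller links both directions
merge.add_next_module(head)
head.add_previous_module(merge)

print([m.name for m in merge.previous_modules])
print([m.name for m in merge.next_modules])
```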

- compute_previous_m_update() → tuple[Tensor, int]

Compute the update of the tensor M for the input of all previous modules.

- Returns:

torch.Tensor – update of the tensor M

int – number of samples used to compute the update

- compute_previous_s_update() → tuple[Tensor, int]

Compute the update of the tensor S for the input of all previous modules.

- Returns:

torch.Tensor – update of the tensor S

int – number of samples used to compute the update
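To make the returned pair concrete, here is a pure-Python sketch of one way such an update could be formed. It rests on two assumptions that this page does not state: that S is the un-normalized second moment of the input (the sum over samples of x xᵀ), and that the inputs of all previous modules are concatenated along the feature axis. `concat_inputs` and `s_update` are hypothetical helpers.

```python
def concat_inputs(per_module_inputs):
    # per_module_inputs[k][i] is the input of previous module k for sample i
    n_samples = len(per_module_inputs[0])
    return [
        [v for inputs in per_module_inputs for v in inputs[i]]
        for i in range(n_samples)
    ]

def s_update(samples):
    # assumed definition: sum over samples of the outer product x x^T
    dim = len(samples[0])
    s = [[0.0] * dim for _ in range(dim)]
    for x in samples:
        for r in range(dim):
            for c in range(dim):
                s[r][c] += x[r] * x[c]
    # return the update together with the number of samples it covers
    return s, len(samples)

inputs_a = [[1.0, 2.0], [0.0, 1.0]]  # module A: 2 samples, 2 features
inputs_b = [[3.0], [1.0]]            # module B: 2 samples, 1 feature
s, n = s_update(concat_inputs([inputs_a, inputs_b]))
print(s, n)
```

Returning the sample count alongside the un-normalized sum lets a caller accumulate updates over several batches and normalize once at the end.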

- compute_s_update() → tuple[Tensor, int]

Compute the update of the tensor S. The computation depends on the type of layer and should be implemented accordingly.

- Returns:

torch.Tensor – update of the tensor S

int – number of samples used to compute the update

- delete_update(include_previous: bool = False) → None

Delete the update of the optimal added parameters.

- forward(x: Tensor) → Tensor

Define the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

- parameters(recurse: bool = True) → Iterator[Parameter]

Return an iterator over module parameters.

This is typically passed to an optimizer.

- Parameters:

recurse (bool) – if True, then yields parameters of this module and all submodules. Otherwise, yields only parameters that are direct members of this module.

- Yields:

Parameter – module parameter

Example:

    >>> # xdoctest: +SKIP("undefined vars")
    >>> for param in model.parameters():
    >>>     print(type(param), param.size())
    <class 'torch.Tensor'> (20L,)
    <class 'torch.Tensor'> (20L, 1L, 5L, 5L)

- projected_v_goal() → Tensor

Compute the projected gradient of the goal with respect to the activity of the layer.

dLoss/dA_proj := dLoss/dA - dW B[-1], where A is the pre-activation vector of the layer and dW is the optimal delta for all the previous layers.

- Returns:

projected gradient of the goal with respect to the activity of the next layer, dLoss/dA - dW B[-1]

- Return type:

torch.Tensor
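The formula dLoss/dA - dW B[-1] can be worked through on a single sample. The sketch below is pure Python and only illustrates the arithmetic: `toy_projected_v_goal` is a hypothetical helper, and the shapes are assumptions (dLoss/dA has length out_features, dW has shape (out_features, in_features), and B[-1] has length in_features).

```python
def toy_projected_v_goal(grad_a, delta_w, b):
    # grad_a:  dLoss/dA for one sample, length out_features
    # delta_w: dW, assumed shape (out_features, in_features)
    # b:       B[-1], the layer input, length in_features
    return [
        g - sum(w * x for w, x in zip(row, b))  # g_i - (dW b)_i
        for g, row in zip(grad_a, delta_w)
    ]

grad_a = [1.0, -2.0]
delta_w = [[0.5, 0.0, 1.0],
           [0.0, 2.0, 0.0]]
b = [2.0, 1.0, 3.0]

v_proj = toy_projected_v_goal(grad_a, delta_w, b)
print(v_proj)
```

Here dW b equals [4.0, 2.0], so the projected gradient is what remains of dLoss/dA after subtracting the part already explained by the optimal update of the previous layers.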

- set_next_modules(next_modules: list[AdditionGrowingModule | GrowingModule]) → None

Set the next modules of the current module.

- Parameters:

next_modules – list of next modules

- set_previous_modules(previous_modules: list[AdditionGrowingModule | GrowingModule]) → None

Set the previous modules of the current module.

- Parameters:

previous_modules – list of previous modules

- sum_in_features(with_bias: bool = False) → int

Count the total in_features of the previous modules.

- Returns:

sum of previous in_features

- Return type: